Analyzing Data with Kafka

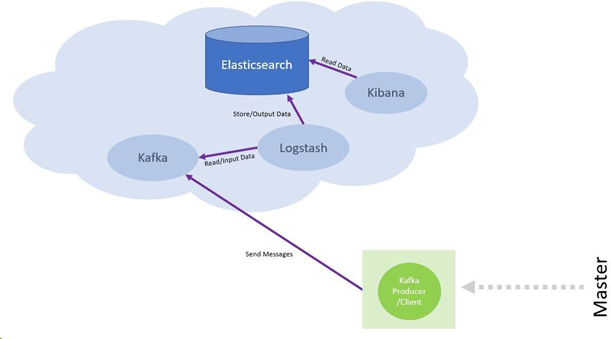

Apache Kafka is a distributed streaming platform that publishes streams of data records. APM Stream sends operational data from a TA Master to a Kafka cluster, which stores the records in categories called topics. The raw data is then available directly from Kafka, or through third-party applications such as Elastic’s Elasticsearch, Logstash, and Kibana (ELK), which you can use to analyze and visualize it.

APM Stream sends these types of data to Kafka:

- Statuses of APM Stream jobs (APMJobStatus object)
- Information about the TA Master queue (APMQueueHealthMessage object)
- Master job virtual resources (APMJobResource object)
- Jobs processed by agents and adapters (Connection Data object)
- Fault monitoring events (FMLog object)
- Status of a TA Master (MasterStatus object)
- Performance of individual job queues (Queue object)
- Statistical information about job performance (Statistics object)
- System information about job activity (SystemActivityMessage object)
- Events for SLA activity, tag activity, and critical path messages (APMEventNotification object)
- Server resource and performance (APMServerResource object)
- Changes to business data for compliance management (APMObjectChangeEvent object)
- Changes to the schedule time zone (ScheduleTimeZone object)
- Information about the APM Stream service (Service object)
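As a rough illustration of working with the raw records outside of ELK, the following Python sketch tallies records per topic and decodes the payloads of one topic. It rests on assumptions that this page does not confirm: that topic names match the object names listed above, that record values are UTF-8 encoded JSON, and that fields such as `jobName` and `status` exist. The sample records and field names are hypothetical; check the actual topics and payloads in your Kafka cluster before relying on any of them.

```python
import json
from collections import Counter
from typing import Iterable, Iterator, Tuple

# Hypothetical sample data: (topic, raw value) pairs as a consumer might
# deliver them. Topic names, JSON encoding, and field names are assumptions.
SAMPLE_RECORDS = [
    ("APMJobStatus", b'{"jobName": "nightly_load", "status": "Completed"}'),
    ("APMJobStatus", b'{"jobName": "report_gen", "status": "Active"}'),
    ("MasterStatus", b'{"state": "running"}'),
    ("APMJobStatus", b"not json"),
]


def count_records_by_topic(records: Iterable[Tuple[str, bytes]]) -> Counter:
    """Count how many records arrived on each topic."""
    counts = Counter()
    for topic, _value in records:
        counts[topic] += 1
    return counts


def decode_json_records(
    records: Iterable[Tuple[str, bytes]], topic: str
) -> Iterator[dict]:
    """Yield decoded JSON payloads for one topic, skipping undecodable values."""
    for record_topic, value in records:
        if record_topic != topic:
            continue
        try:
            yield json.loads(value.decode("utf-8"))
        except (UnicodeDecodeError, json.JSONDecodeError):
            # Ignore records that are not valid UTF-8 JSON.
            continue


if __name__ == "__main__":
    print(count_records_by_topic(SAMPLE_RECORDS))
    for payload in decode_json_records(SAMPLE_RECORDS, "APMJobStatus"):
        print(payload)
```

The functions take plain `(topic, value)` pairs so that they stay independent of any particular Kafka client. With a client library such as kafka-python, for example, each consumed record exposes `topic` and `value` attributes that can be mapped to these pairs.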

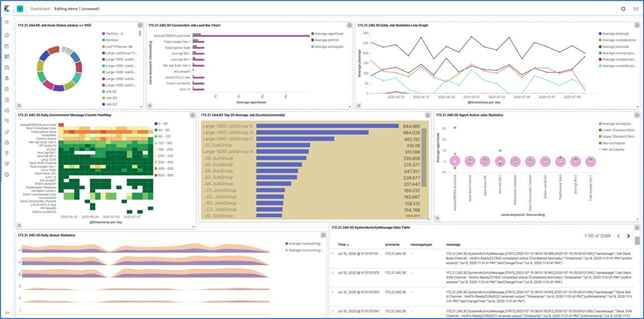

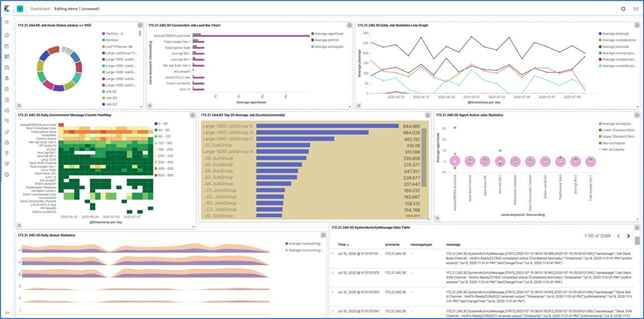

Use Kibana to display graphs for specific data objects and the contents of the data objects that APM Stream sends to Kafka. The illustration shows a graph of the APMJobStatus object.

Kibana dashboards can provide a broad picture of TA activity.